A lot of artists are exploring AI and posting their results all over social media, but very few are creating complete pieces.

I’ve been following the LinkedIn posts of a longtime NYC Creative Director (https://www.kingboss.tv/) about the progress of his all-AI animated short, The Moon’s Tooth. Having been a fan of his work since we worked together at the now-defunct Post Perfect, I asked him to be a panelist on our AI and Animation panel last month. His insights on the process were both edifying and fascinating.

In case you didn’t make it to the panel, we asked him to answer a few questions for our newsletter about the AI process, the various AI models, and their pros and cons.

This Q&A was conducted via email.

Congratulations on finishing your first AI animation. Can you tell us a bit about how it came to be and why you chose AI to bring it to life?

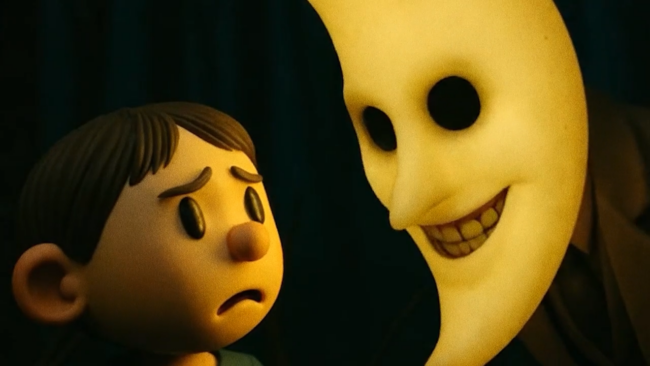

I wrote “The Moon’s Tooth” over 10 years ago with the intention of filming it in stop motion. At the time, I was working with Bent Image Lab in Portland, OR, and was very inspired by their process and artists. My friend Jenna Rose Roberts helped me translate the story into a working screenplay, and artists Odessa Sawyer and Nico Teitel provided the initial concept designs for the characters.

I’ve self-funded a number of films, but never got around to making this one. The script sat in my doom pile for years until I decided to try to visualize a few scenes in AI. The results weren’t bad, so I expanded and storyboarded an abridged version of the film.

It sat as storyboards for another year until the technology matured enough to generate coherent animated scenes using the various video generators. It wasn’t my intention to make the film in AI; the generation was simply a low-cost visualization tool. I’m happy with the results, but nothing beats actually shooting and animating the project. Some sections are still rough, but I decided to call it done. It’s basically a snapshot of where the technology was in August 2025.

During the panel discussion, you talked about using many different AI LLMs (or are they called models?) during production. Can you give us some details on why, and on the pros and cons of each?

Each AI model has its own unique characteristics. That’s why we’ve seen companies including Adobe and Runway aggregating different models into their services. Veo, for example, tends to do better with live action. Sora and Luma do better with animation. Midjourney is tops in terms of getting cinematic images because it’s trained on high-quality material.

Personally, I’m a fan of the Chinese models like Wan and Seedance. They are well-rounded and cost a fraction of the price.

This all changes weekly, so there’s no true “guide” to go by. You just have to play with it and keep up with the tech.

How did you keep the character design consistent from shot to shot?

Most models have solved the consistency problem. You can get fairly good results with a reference and a descriptive prompt; LoRAs are no longer needed. It helps if your character has distinguishing characteristics like “red glasses” or “yellow boots.” These go a long way toward establishing visual throughlines, even if the render falls a little short.

Usually in animation we have very few outtakes because of the amount of planning. I’m guessing that’s not the case when using AI.

In AI, you can make blooper reels.

Oftentimes you have to generate a shot 20 to 30 times to get something usable, so editing becomes important… much like on a live action shoot. The difference is that if you don’t have all the shots you need, or if you need additional footage, you can simply generate more instead of scheduling a reshoot.

What do you think about the future of AI in animation? Will it become just another tool in the toolset? Will it replace artists? Will it replace studios?

Artists (from production designers to animators) are irreplaceable, and many AI-forward agencies and studios are starting to find that out. If you know your craft, you can guide the process and create a cohesive story that’s just as good as anything made by traditional means. That said, in AI’s current state, it might require just as much work.

The iPhone did not replace SLRs, but it did change photography. And it changed the world! I do see AI as a tool… much like your phone is a multifaceted tool. AI has changed the industry and will continue to do so. We are just at the beginning.